SaaS Convenience, On-Prem Security: Announcing the Saddle Data Remote Agent

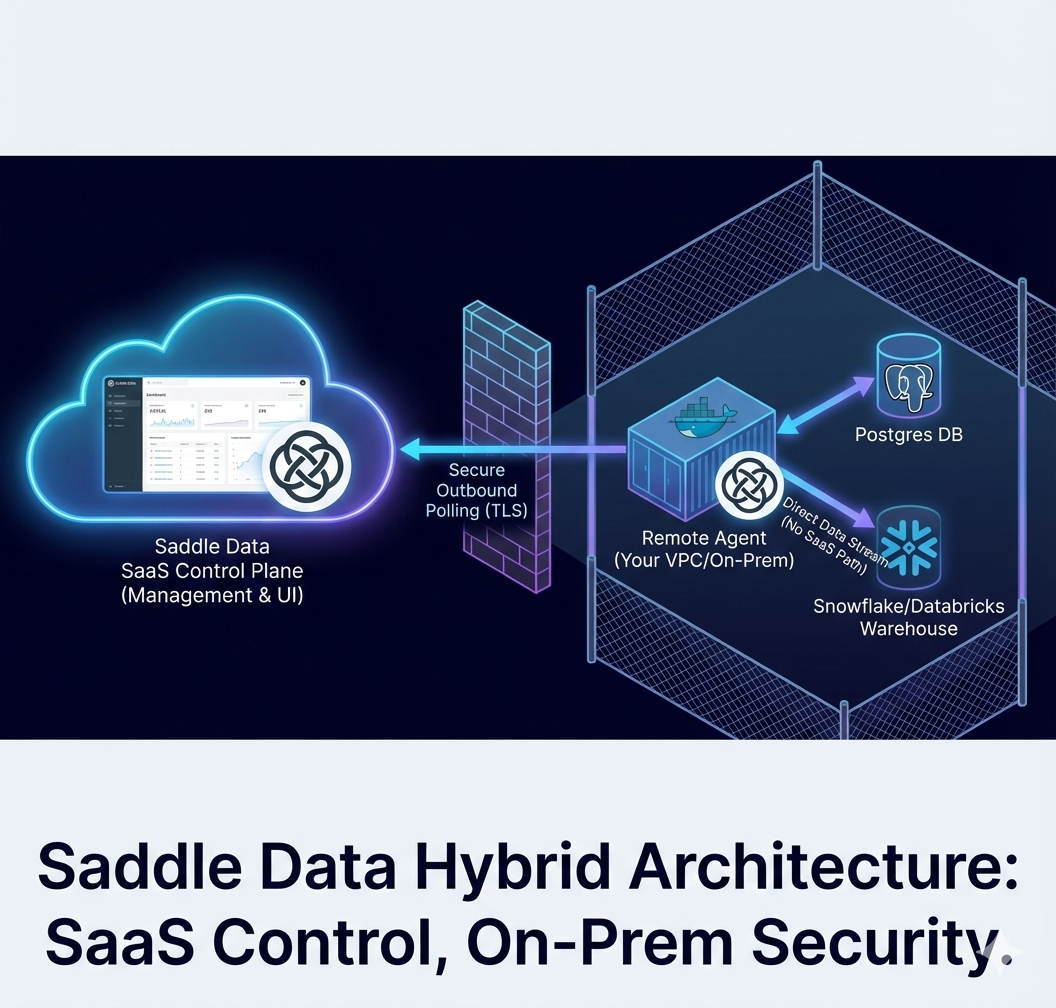

TL;DR: You can now run Saddle Data’s extraction and loading logic inside your own infrastructure via a Dockerized Remote Agent. This allows you to keep your database behind your firewall, keep credentials local, and maintain a “Zero Trust” posture while still using our SaaS control plane for management and observability.

The hardest part of adopting a new data tool isn’t the technical implementation. It’s the security review.

If you work in Fintech, Healthtech, or any regulated industry, the conversation usually goes like this:

Engineering: “We want to use this new SaaS ETL tool to move data from our production Postgres to Snowflake.”

Security/SRE: “Great. How does it connect?”

Engineering: “We need to whitelist their IP range and give them read access to the production replica.”

Security/SRE: “Absolutely not.”

The conflict is real. Engineering wants the ease of use and low maintenance of SaaS. Security demands the control and isolation of on-prem software.

Today, we are resolving that conflict. We are introducing the Saddle Data Remote Agent.

The Hybrid Data Plane Architecture

We have decoupled our platform into two distinct parts: the Control Plane and the Data Plane.

- The Control Plane (SaaS): This is hosted by Saddle Data. It’s the web UI where you design pipelines, set schedules, view logs, and manage users.

- The Data Plane (Remote Agent): This is the “worker” that actually executes the data movement. It is a lightweight Docker container that you run inside your own environment (VPC, on-prem data center, or Kubernetes cluster).

This hybrid approach gives you the best of both worlds: the management experience of a modern SaaS app, with the security posture of a self-hosted tool.

How It Works (The “Zero Trust” Technical Deep Dive)

The Remote Agent changes the fundamental relationship between your infrastructure and ours. Instead of our cloud reaching in, your agent reaches out.

Here is the flow:

- No Inbound Ports: You deploy the Remote Agent Docker container behind your firewall. You do not need to open port 5432 (or any other port) to the public internet.

- Outbound Polling: The agent establishes a secure, outbound-only TLS connection to the Saddle Data Control Plane. It “polls” for work, asking: “Are there any jobs scheduled for me?”

- Local Credentials: Your database credentials (username/password for Postgres, API keys for Stripe) are injected directly into the Remote Agent container as environment variables or via your Secrets Manager (e.g., AWS Secrets Manager, HashiCorp Vault). Saddle Data’s cloud servers never see or store your source credentials.

- Direct Streaming: When a job runs, the agent connects locally to your source, extracts the data, buffers it in memory, and streams it directly to your destination (e.g., Snowflake or Databricks). Your customer data does not pass through Saddle Data’s servers.
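The flow above can be sketched in a few lines of Python. This is an illustration of the pattern, not the agent's actual code: `Job`, `poll_once`, `run_agent`, the `SOURCE_DB_USER` / `SOURCE_DB_PASSWORD` variable names, and the injected `fetch_job`, `report`, `extract`, and `load` callables are all hypothetical stand-ins for whatever the real agent uses.

```python
import os
import time
from dataclasses import dataclass
from itertools import islice
from typing import Callable, Iterable, Iterator, List, Mapping, Optional


@dataclass(frozen=True)
class Job:
    """Job metadata handed out by the control plane. It carries no credentials."""
    job_id: str
    source_table: str
    destination_table: str


def batches(rows: Iterable, size: int) -> Iterator[List]:
    """Buffer rows in memory in fixed-size chunks."""
    it = iter(rows)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def run_job(job: Job, creds: Mapping[str, str],
            extract: Callable, load: Callable, batch_size: int = 1000) -> int:
    """Stream rows from source to destination without leaving the local network path."""
    moved = 0
    for chunk in batches(extract(job.source_table, creds), batch_size):
        load(job.destination_table, chunk)
        moved += len(chunk)
    return moved


def poll_once(fetch_job: Callable[[], Optional[Job]], report: Callable[[dict], None],
              extract: Callable, load: Callable,
              env: Mapping[str, str] = os.environ) -> bool:
    """One outbound poll: ask for work, run it locally, report metadata only."""
    job = fetch_job()  # outbound request; nothing listens for inbound traffic
    if job is None:
        return False
    # Credentials are read locally (hypothetical variable names) and never reported.
    creds = {"user": env["SOURCE_DB_USER"], "password": env["SOURCE_DB_PASSWORD"]}
    moved = run_job(job, creds, extract, load)
    report({"job_id": job.job_id, "status": "succeeded", "rows": moved})
    return True


def run_agent(fetch_job, report, extract, load, idle_seconds: float = 30.0) -> None:
    """Poll forever, sleeping between polls when there is no work."""
    while True:
        if not poll_once(fetch_job, report, extract, load):
            time.sleep(idle_seconds)
```

The point of the shape is that only `fetch_job` and `report` talk to the control plane, and neither receives `creds` or row data. In this sketch, everything sensitive stays inside `run_job`.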

Why SecOps Will Love This

- Firewall Integrity: Keep your “deny all inbound” policy intact.

- Data Sovereignty: Your customer PII moves only from your controlled VPC to your controlled warehouse. It never transits our servers.

- Reduced Attack Surface: Since we don’t hold your database keys, a breach of our SaaS layer does not hand an attacker access to your production data.

Getting Started

The Remote Agent is available today for pipelines such as Postgres to Snowflake or Databricks. It also supports running our new dbt Core integration directly inside your infrastructure for secure transformations.

Running the agent is as simple as spinning up a Docker container with your unique Agent Key.
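As a rough sketch of what that launch might look like, the snippet below builds a `docker run` command line in Python. The image name, the `SADDLE_AGENT_KEY` variable, and the container name are placeholders invented for illustration; the deployment guides have the real values. The `docker run` flags themselves (`--detach`, `--name`, `--restart`, `--env`) are standard.

```python
import shlex
from typing import List


def docker_run_command(image: str,
                       key_env_var: str = "SADDLE_AGENT_KEY",  # hypothetical name
                       name: str = "saddle-agent") -> List[str]:
    """Build a `docker run` invocation for the agent container.

    Passing `--env VAR` with no value tells Docker to copy VAR from the
    calling shell's environment, so the key never appears in the command
    line or in shell history.
    """
    return [
        "docker", "run",
        "--detach",
        "--name", name,
        "--restart", "unless-stopped",
        "--env", key_env_var,
        image,
    ]


# Placeholder image reference; substitute the one from the documentation.
cmd = docker_run_command("example.invalid/saddle/remote-agent:latest")
print(shlex.join(cmd))
```

Note that no `--publish` flag appears: the agent only makes outbound connections, so the container needs no exposed ports.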

Check the documentation for deployment guides on AWS ECS, Kubernetes, and standard Docker Compose.